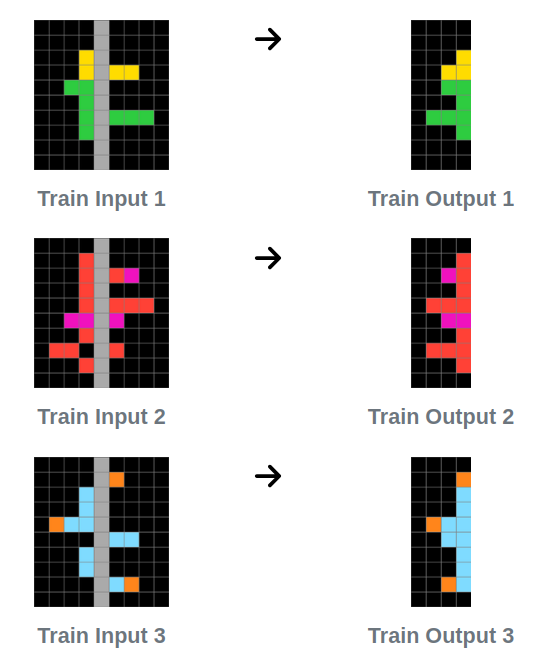

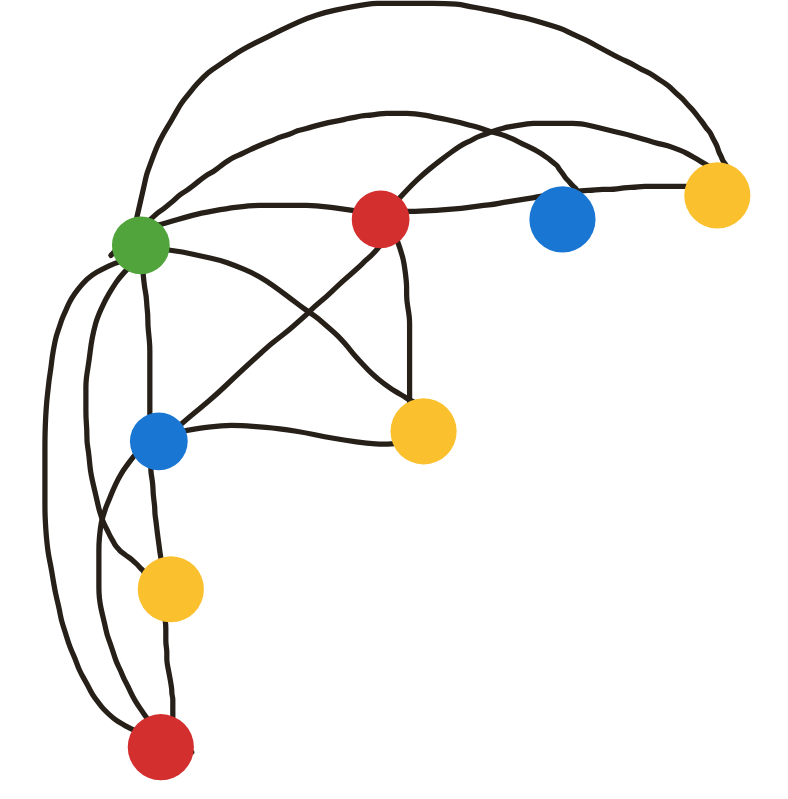

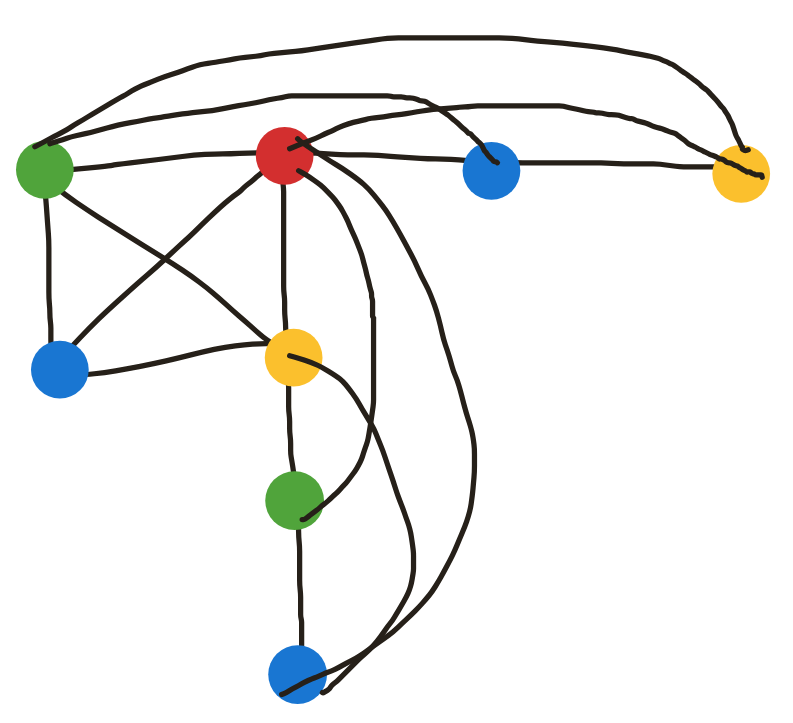

<!-- Overwrites defaults from reveal_config.yaml and mkslides.yml -->
<!-- <style> </style> -->
<style>
.reveal p {
  line-height: 1.1;
  margin: var(--r-block-margin) 0;
  /* text-align: center; */
}
red{color:red; data-background-color}
green{color:green; data-background-color}
/*<animate> tag does NOT work yet */
animate{.element .fragment data-auto-animate}
</style>

<!-- ## Hierarchical <red>Reasoning</red> <green>Model</green> -->

# Hierarchical
# <red>Reasoning</red> Model

<br>
<br>

## HRM[<sup>0</sup>](#references)

<br>
<br>

<small><em><strong>ESC</strong> or <strong>O</strong> for overview (site map)</em></small>

Notes:

- Can it be called reasoning?
- Step-by-step reasons for actions intuited all at once
- Open-loop beam search on token embeddings instead of logic?

--v--

## Shoutouts

<table>
<tr><td><a href="https://www.linkedin.com/in/ACoAACshDjQBj207zyMqyjlZ-nkK4F0Vp-zOnes">Sai Paladugula</a></td><td>debugging and refactoring HRM</td></tr>
<tr><td><a href="https://tangibleai.com/">Tangible AI</a></td><td>coffee</td></tr>
<tr><td><a href="https://hiredcoach.ai/">Keith Chester</a></td><td>RL talk, ARC analysis</td></tr>
<tr><td><a href="https://www.linkedin.com/in/adam-econ-smith">Adam Smith</a></td><td>Reasoner talk</td></tr>
</table>

Notes:

- Sai: ancient 10-year-old GPUs, LinkedIn
- Chester: links to hiredcoach.ai
- Keith: [HRM analysis by Chollet](https://arcprize.org/blog/hrm-analysis)
- Adam Smith: [AZR talk](https://docs.google.com/presentation/d/18ojo6MQrTIBnFCCI6uch1mGzV4hV_d_snDU6WNT1Fpc/edit?slide=id.g37673fa31e1_1_309#slide=id.g37673fa31e1_1_309)

---

## Summary

#### Hierarchical Reasoning Model is claimed to be

- Neuromorphic (hierarchical)
- Smaller
- Faster (selective backprop, like LSTM)
- Better (at reasoning)

Note:

Nested transformer

--v--

## Neuromorphic

<a href="hrm/neuromorphic-hierarchy.png">
<img src="hrm/neuromorphic-hierarchy.png" alt="Low-level and high-level reasoning shown as successive blocks with feedback loops in
feedforward direction." height=768 />
</a>

Note:

- input at bottom, output at top
- everything else is wrong
- the other feedforward loops are completely wrong
- output should be shown to be concatenated
- blocks should be in parallel with a z^-60 delay line on LL

--v--

## Corrected typos

<a href="hrm/HRM-nested-loops.drawio.svg">
<img src="hrm/HRM-nested-loops.drawio.svg" alt="Nested feedback loop with corrected arrow directions showing feedback looping around to the inputs for both HL and LL transformers." height=768 />
</a>

Note:

- both HL & LL are encoder-only transformers
- same architecture (4 Transformer layers)
- 4x(Attention + SwiGLU)
- same embedding "hidden_size" (512)

--v--

<a href="https://i0.wp.com/www.diygenius.com/wp-content/uploads/2022/12/brainwave-frequencies-chart.jpg">
<img src="hrm/brainwave-frequencies-chart.jpg" alt="Five sine waves of decreasing frequency for brainwave states. Gamma: peak insight experiences. Beta (65Hz): alert, focused thinking. Alpha: meditation, relaxed creativity. Theta (): visualization, trance, sleep. Delta: deep sleep" height=768 />
</a>

<sup>[DIYgenius.com](https://www.diygenius.com/the-5-types-of-brain-waves/)</sup>

--v--

<a href="hrm/unrolled-in-time.png">
<img src="hrm/unrolled-in-time.png" alt="Two low-level 4-layer transformers are fed forward for every one high-level transformer." height=768 />
</a>

--v--

## Smaller

### size matters

"Text compression and AI are equivalent problems"
-- Marcus Hutter

<br>

- [Hutter Prize](http://prize.hutter1.net/): €5,000 per 1% improvement
- Grand prize: €0.5M for 100 1% improvements (9x -> 24x)
- Compression ratio == AI?
- Semantically lossless? Literally lossless?
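
--v--

## Compression ratio arithmetic

A minimal Python sketch of the numbers on this slide, assuming "compression ratio" means original bytes divided by compressed bytes and that each prize-winning entry must shrink the previous record by at least 1%. The helper names are hypothetical, and the standard-library `zlib` (DEFLATE) is only a weak baseline, not what Hutter Prize entrants actually use.

```python
import zlib


def compression_ratio(original_size: int, compressed_size: int) -> float:
    """Original size divided by compressed size (bigger is better)."""
    return original_size / compressed_size


def zlib_ratio(data: bytes) -> float:
    """Ratio from zlib (DEFLATE) at its maximum level: a weak, general-purpose baseline."""
    return compression_ratio(len(data), len(zlib.compress(data, 9)))


def ratio_after_improvements(start_ratio: float, n_improvements: int, pct: float = 1.0) -> float:
    """Ratio after n records, each shrinking the previous compressed size by pct percent."""
    return start_ratio / (1 - pct / 100) ** n_improvements


if __name__ == "__main__":
    enwik9_bytes = 10**9
    fx2_cmix_bytes = 110_793_128  # 2024 enwik9 record (Orav and Knoll), total size in bytes
    print(f"fx2-cmix: {compression_ratio(enwik9_bytes, fx2_cmix_bytes):.2f}x")  # 9.03x
    print(f"9x after 100 one-percent records: {ratio_after_improvements(9.0, 100):.1f}x")  # 24.6x
    toy = b"Text compression and AI are equivalent problems. " * 200
    print(f"zlib on repetitive toy text: {zlib_ratio(toy):.0f}x")  # inflated by repetition
```

Each 1% record multiplies the ratio by 1/0.99, so one hundred of them multiply it by about 2.73, which is how roughly 9x becomes roughly 24.6x. The toy `zlib` ratio is inflated because the input is one sentence repeated, a kind of redundancy real text rarely has.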
--v--

## Hutter prize enwik8 (100MB)

Author                | Date | Algo     | Total size (bytes) | Ratio | Improvement | Award |
--------------------- | ---- | -------- | ------------------ | ----- | ----------- | ----- |
Matt Mahoney          | 2006 | paq8f    | 18,324,887         | 5.46  |             |       |
Alexander Rhatushnyak | 2006 | paq8hp5  | 17,073,018         | 5.86  | 6.8%        | €3.4k |
Alexander Rhatushnyak | 2007 | paq8hp12 | 16,481,655         | 6.07  | 3.5%        | €1.7k |
Alexander Rhatushnyak | 2009 | decomp8  | 15,949,688         | 6.27  | 3.2%        | €1.6k |
Alexander Rhatushnyak | 2017 | phda9    | 15,284,944         | 6.54  | 4.17%       | €2k   |

<br>

- Only modest progress
- Funded only by Marcus Hutter

Note:

- for 20 years only Marcus Hutter has been willing to fund this research
- because of his understanding of "lossless" compression of text
- lossless semantic "compression" of text would be AGI

--v--

## Hutter prize enwik9 (1GB)

Author             | Date | Algo      | Total size (bytes) | Ratio | Improvement | Award |
------------------ | ---- | --------- | ------------------ | ----- | ----------- | ----- |
Alex Rhatushnyak   | 2019 | phda9v1.8 | 116,673,681        | 8.57  |             |       |
Artemiy Margaritov | 2021 | starlit   | 115,352,938        | 8.67  | 1.1%        | €9k   |
Saurabh Kumar      | 2023 | fast cmix | 114,156,155        | 8.76  | 1.04%       | €5k   |
Kaido Orav         | 2024 | fx-cmix   | 112,578,322        | 8.88  | 1.38%       | €7k   |
Orav and Knoll     | 2024 | fx2-cmix  | 110,793,128        | 9.03  | 1.59%       | €8k   |
You?               | 2025 | ????      | <109,685,197       | >9.12 | >1%         | >€5k  |

<br>

- Scaled size, but not **meaning**!
- What about lossless **semantic** compression?

--v--

## Sudoku 9x9 compression

<br>

#### ~5.5B essentially different solutions
#### /
#### 27M parameters
#### =
#### 200x

<br>

But puzzle samples are not IID

Notes:

- Intentionally targets 1000 training puzzles
- Sudoku Extreme (human ratings for difficulty)
- No redundant clues
- But expand again by 10x for bytes in a solution

--v--

## Better (meaningfulness matters)

<a href="hrm/accuracy-bar-plot.png">
<img src="hrm/accuracy-bar-plot.png" alt="ARC-1 accuracy: HRM: 40% (Chollet got 32%), ARC-2: 5% (Chollet got <2%), Sudoku 55%, 30x30 Maze: 74%."
height=768 width=2048 />
</a>

--v--

## Reasoning, the process of
### devising and
### executing complex
### goal-oriented action sequences, remains a critical challenge in AI.[<sup>1</sup>](#references)<sup>,</sup>[<sup>2</sup>](#references)

Notes:

Great opener. Much better than

- "It was a dark and stormy night."
- "Call me Ishmael."
- [^1]: 2016 Goodfellow textbook -- Transformer performance on Sudoku
- [^2]: 2015 He -- Deep Residual Learning for Image Recognition -- 152-layer ResNet achieved 96.4% top-5 accuracy on ImageNet

If the paper addresses the reasoning challenge, it could be one of the 2 or 3 superintelligence AGI breakthroughs we're all anticipating.

--v--

**Translation:**

<br>
<br>

### Inductive reasoning is the process of
### learning to write and
### execute
### functions[<sup>5</sup>](#references)<sup>,</sup>[<sup>6</sup>](#references)

Notes:

- that's just inductive reasoning, machine learning
- the "execute" part must refer to test-time compute
- Francois Chollet calls this "generating programs" that solve ARC tasks
- "On the Measure of Intelligence"
- So HRM should be good at generating code if it can solve ARC
- makes this an important and viral paper

--v--

## Reasoning

<br>

#### Deductive

_program_ ``+`` _inputs_ ``=`` deduce **[outputs]()**

**[y]()** = _f(x)_

<br>

#### Abductive

_program_ ``+`` _outputs_ ``=`` abduce (guess) **[inputs]()**

__y__ = __f__(**[x]()**)

<br>

#### Inductive

_inputs_ ``+`` _outputs_ ``=`` induce **[algorithm]()**

__y__ = **[f]()**(__x__)

Notes:

- shout-out to Adam Smith for his slides & discussion on this
- deductive: instruction following, agentic reasoning
- abductive: "reasoning backwards or analytically...
dear Watson"
- inductive: HRM = pattern recognition or ML, algorithm learning, program generation

--v--

## Inductive reasoning examples

- Abstract Reasoning Corpus (ARC-1)
- Mazes
- Sudoku Puzzles

Note:

- Logical reasoning ~= graph search

--v--

## Code review

- `models.utils.`**functions** module
  - Single 3-line function
  - Imports config yaml files by name
  - Only used once
- Hierarchy of Pydantic objects
  - Convert dictionaries into typed Config classes
  - Only used once
  - Difficult to trace to know what default config actually is
- PyTorch models mix functional and OO layers
  - Difficult to keep track of dimensions (no dimension comments)
  - batch_first=`True`? `False`?
  - Difficult to count parameters
- wandb
  - No saved checkpoints or human-readable logs (except tracebacks)

Note:

- Impressed w/ modularity and sophistication
- But when I tried to trace hyperparameters & count learned parameters

--v--

### Deep learning, ...
### stacking more layers to achieve
### increased representation power and
### improved performance

--v--

## Deep learning enables the learning of
### more meaningful and
### more precise
### representations (abstractions)

---

## ARC-1

[arc-visualizations.github.io](https://arc-visualizations.github.io/)

Note:

- branching factor: 30x30x10 = 9,000 (~10k)
- max depth: ~1000
- [On the measure of intelligence by Francois Chollet](https://arxiv.org/pdf/1911.01547)

--v--

## Maze

#### Wall-following

<a href="hrm/wiki-maze-wall-following.svg">
<img src="hrm/wiki-maze-wall-following.svg" height=768 width=2048 alt="Animation of maze traversal using the wall-following algorithm with the right-hand rule. The red dot stays adjacent to the right-hand wall as it navigates through a maze from the top-left entrance hole to the bottom-right exit hole. The maze has no islands (walls detached from the exterior wall); otherwise, the algorithm could fail to find an exit."
/>
</a>

Notes:

- useful for IRL navigation (unlike HRM)
- does not require a bird's-eye view
- not useful for IRL navigation (HRM more useful)
- can fail for mazes with "islands"
- can fail to find the shortest path
- can fail when the start and goal are inside the maze

--v--

## Sudoku

### Intuitive "inductive reasoning"

Solutions require 15k to 1M cycles

Note:

- Brute force (depth-first search or backtracking) solves in seconds
- Difficulty doesn't affect runtime significantly
- Humans augment brute-force or stochastic algorithms by first filling in easy/obvious values

--v--

## Architecture

+ 512D embedding vector
+ Attention Block<!-- .element: class="fragment" -->
  + Attention 2M
  + SwiGLU (MLP) 4M<!-- .element: class="fragment" -->
* x4 Attention Blocks

<br>

= 27M <!-- .element: class="fragment" -->

--v--

## Sudoku "Compression" by HRM

27M

--v--

## Meaningfulness matters

<a href="hrm/accuracy-bar-plot.png">
<img src="hrm/accuracy-bar-plot.png" alt="ARC-1 accuracy: HRM: 40% (Chollet got 32%), ARC-2: 5% (Chollet got <2%), Sudoku 55%, 30x30 Maze: 74%." height=768 width=2048 />
</a>

Notes:

- Lossless compression
- Semantic accuracy
- Semantic completeness

--v--

#### HRM meaningfulness

ARC-1 accuracy: HRM: 40% (Chollet got 32%), ARC-2: 5% (Chollet got <2%), Sudoku 9x9: 55%, 30x30 Maze: 74%.

- Sudoku:

```python
>>> from math import factorial
>>> solutions = 6.67e21  # all valid 9x9 grids
>>> symmetries = factorial(9) * 6**8 * 2  # relabelings x row/column permutations x transpose
>>> round(solutions / symmetries / 1e9, 2)  # approx. billions of essentially different grids
5.47
```

- 6.67×10<sup>21</sup> solutions
- ~5.5B essentially different solutions
- / **27M**

Notes:

- ~5.5B essentially different solutions would have to be learned
- Why does everyone care about compression ratio? Is it a measure of generalization, capacity, or intelligence?

--v--

## LLM compression

#### 10x-100x compression

- Llama
  - Llama 2: 2T / 70B = 30x
  - Llama 3.2: 9T / 90B = 100x
- Claude 3
  - Sonnet: 2T? / 70B = 30x
  - Opus: 2T? / 2T? ~= 1x

Notes:

- You can think of LMs as compression algorithms or fuzzy databases
- Compression ratio as compression performance (intelligence) benchmark?
- Assumes 100% semantic reproduction accuracy (human can't tell the difference)
- Compression ratios to measure intelligence
- ChatGPT compresses a large chunk of the public Internet into

--v--

## SAI - Specialized AI

#### Symbolic AI
#### GOFAI

- HRM = More efficient DL
- HRM < Reasoning < AI < AGI

Note:

- More efficient DL?
- Learns an algorithm or function for one specific problem
- Can solve 9x9 sudoku problems after training on many examples
- Would have to retrain on 4x4 sudoku or each new class of ARC prize puzzle
- Not AGI or LLM AI
- Learns one algorithm at a time

--v--

## AC<sup>0</sup> ⊆ TC<sup>0</sup>

### Transformers

Fixed-depth

Notes:

- The HRM paper shows that increasing Transformer depth _can_ improve Sudoku performance, but it remains short of optimal.

--v--

## TC<sup>2</sup>

### RNN

Polylogarithmic depth [^9]

LLM scaling

Note:

- BPTT makes training problematic (vanishing or exploding gradients)
- Recursiveness makes it computationally expensive (polylogarithmic depth)

--v--

## Complexity (expressivity) hierarchy

NC<sup>i</sup> ⊆ AC<sup>i</sup> ⊆ ACC<sup>i</sup> ⊆ TC<sup>i</sup> ⊆ NC<sup>i+1</sup>

NC = AC = ACC = TC

- TC: Threshold circuit
- ACC: AC w/ Counting
- AC: Alternating (AND/OR) circuit
- NC: Nick's class

Notes:

10↑↑15 = 10^10^10^...^10, an exponential tower of 15 tens.

--v--

## NC<sup>i</sup>

Polylogarithmic time on a parallel architecture with a polynomial number of processors (horizontal scaling).

Stephen Cook named the class after Nick Pippenger's work on networks of polylogarithmic depth and polynomial size.

- integer +, ×, /
- matrix ×, determinant, inverse, rank
- polynomial GCD, by a reduction to linear algebra using the Sylvester matrix
- finding a maximal matching

It's unknown whether NC is a strict subset of

--v--

## TC<sup>0</sup>

Constant-depth, polynomial-size threshold circuit

Transformer (ignores the width)

--v--

## AC<sup>0</sup>

Constant-depth Alternating Circuit

Conjunctions of Disjunctions of Conjunctions of Disjunctions of...
O(log<sup>i</sup>(N)) depth

## Minsky

<https://en.m.wikipedia.org/wiki/Perceptrons_(book)#/media/File%3APerceptrons_(book).jpg>

- Symbolic AI winter, '70-'89: book cover alongside HRM maze and AIMA search
- Minsky loudly debated Rosenblatt (Bronx) and quelled neural net (perceptron) research until '89
- [playground.tensorflow.org](https://playground.tensorflow.org)

---

## Maze

#### Trémaux

<a href="hrm/wiki-maze-tremaux.gif">
<img src="hrm/wiki-maze-tremaux.gif" alt="Trémaux's algorithm. The large green dot shows the current position, the small blue dots show single marks on entrances, and the red crosses show double marks. Once the exit is found, the route is traced through the singly-marked entrances. Note that two marks are placed simultaneously each time the green dot arrives at a junction. This is a quirk of the illustration; each mark should in actuality be placed whenever the green dot passes through the location of the mark." height=768 width=668 />
</a>

Notes:

- Like HRM: allows islands and start/stop points within the maze
- Better than HRM: uses local knowledge only

---

## Sudoku == graph coloring

Example 4x4 Sudoku subgraph

Current node 1,1: <strong style="color:green">green</strong>

Notes:

- current node is the top-left green circle
- neighbors shown

--v--

## Sudoku 4x4 graph coloring

Current node 1,2: <strong style="color:red">red</strong>

Notes:

- current node is the top row, 2nd column, red circle
- neighbors shown

--v--

## Sudoku 4x4

Graph/map coloring problem with 8m 4 fully connected rings with

---

## Sudoku

### 9x9 complexity

```
~6.7 sextillion (6.67 × 10^21) solutions
~5.5B (5.47 × 10^9) essentially different solutions
```

### Symmetries: 9! × 6<sup>8</sup> × 2 ≈ 1.2 × 10<sup>12</sup>

* 9! relabelings (digit permutations)
* 3! × 3!<sup>3</sup> row permutations (bands, then rows within each band)
* 3! × 3!<sup>3</sup> column permutations (stacks, then columns within each stack)
* 2 for transposition (rotations and reflections are combinations of these)

--v--

## Difficult variants

### Killer Sudoku

Sudoku + Kakuro

[Sudoku (Wikipedia)](https://en.wikipedia.org/wiki/Sudoku)

--v--

## Samurai Sudoku

- Gattai 5 ("5 merged")
- Quincunx shape
- 9×9×5
- 4×3×3
- Five 9×9 grids
- Overlap 4 corners

--v--

## Sudoku 9x9 complexity

16x8 branching factor and depth of 9 (diagonal)

Number of 9×9 Sudoku solution grids: ~6.67 × 10<sup>21</sup>

Notes:

The number of essentially different solutions, when [symmetries](https://en.m.wikipedia.org/wiki/Symmetries "Symmetries") such as rotation, reflection, permutation, and relabeling are taken into account, is much smaller: 5,472,730,538.[[30]](https://en.m.wikipedia.org/wiki/Sudoku#cite_note-30)

---

## References

- [^0]: 2025 Guan Wang et al. [Hierarchical Reasoning Model](https://arxiv.org/pdf/2506.21734)
- [^1]: 2016 Goodfellow -- Ian Goodfellow, Yoshua Bengio, and Aaron Courville. _Deep Learning_
- [^2]: 2015 He -- Deep Residual Learning for Image Recognition -- 152-layer ResNet achieved 96.4% top-5 accuracy on ImageNet
- [^3]: 2023 Strobl Aug -- hard attention net (transformer)
- [^4]: 1991 Bylander, U. Ohio + DARPA
- [^5]: 2019 Francois Chollet [On the Measure of Intelligence](https://arxiv.org/pdf/1911.01547)
- [^6]: 2025 Andrew Zhao et al. [Absolute Zero Reasoner](https://arxiv.org/pdf/2505.03335)
- [^7]: 2019 Alexandre Legros et al. [Effects of a 60 Hz Magnetic Field Exposure Up to 3000 μT on Human Brain Activation as Measured by Functional Magnetic Resonance Imaging](https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0132024)
- [^30]: 2008 Peter D Grunwald and Paul M.B.
Vitanyi [Algorithmic Information Theory](https://arxiv.org/pdf/0809.2754) textbook
- [^55]: 1990 Ming Li [Kolmogorov Complexity and its Applications](https://core.ac.uk/download/pdf/301633813.pdf) textbook
- [^2022Merrill]: 2022 Merrill proved that saturated transformers recognize only TC<sup>0</sup> languages
- [^10]: [prize.hutter1.net](http://prize.hutter1.net/)

---

## Trial and error reasoning model

<br>
<br>

### TERM

<br>

#### [Hobson Lane](#references)

--v--

# Questions?

### Hobson Lane

- [TangibleAI.com](https://tangibleai.com)
- [NLPIA.org](https://nlpia.org)
- [GitLab @hobs](https://GitLab.com/hobs)

Notes:

Thank you! I got a lot out of this.